Ally Hirschlag, BBC Future

17 February 2026

In March 2025, a fleet of 30 planes and drones fired silver iodide pellets into the sky over northern China. As the pellets hit the air, the pale-yellow powder inside them emerged and quickly turned into greyish wisps, streaking the sky as the aircraft released them in crisscross patterns. Far below, more than 250 ground generators launched rockets carrying the same pellets.

The aim was to bring relief from drought to the northern and north-western regions, known as the country's grain belt. The vast operation was the “spring rain” project, run by the China Meteorological Administration and planned to benefit crops at the start of the planting season.

The enormous operation was apparently a success, reportedly producing an additional 31 million tonnes of precipitation across 10 drought-prone regions.

China has been trying to artificially increase its rainfall since the 1950s using a well-known, though still controversial, method: cloud seeding.

The method seeks to coax clouds into producing more moisture using tiny particles, usually of silver iodide, whose shape and weight are similar to those of an ice particle.

Cloud seeding has long raised concerns, ranging from possible environmental risks and the impacts of the chemicals used to potential harm to people in neighbouring areas from altered rainfall patterns, as well as the security tensions that could follow.

And even as one of the world's most populous countries steps up the practice, scientists and experts continue to question how well it really works.

Path to rain

In recent years, China has significantly stepped up its cloud-seeding efforts, thanks largely to advances in drone and radar technology. The country now carries out weather modification over more than 50% of its territory, mainly to increase precipitation, although it is also trying to reduce it in certain areas.

The technique has even been used to manage the weather on specific dates, such as the 2008 Beijing Olympic Games and the centenary celebrations of the Chinese Communist Party in 2021.

Weather modification has become “a vital project for the scientific development of atmospheric clouds and water resources, serving the country and benefiting the people”, said Li Jiming, director of China's Weather Modification Centre, at the time of the 2025 “spring rain” operation. “It is a crucial component of building a strong meteorological nation,” he added, stressing the need to drive China “from a major player in artificial weather modification to a global leader”.

The reason for China's growing interest in controlling precipitation is obvious: since the 1950s, the country has faced increasingly frequent and severe droughts, with impacts on its agriculture and economy.

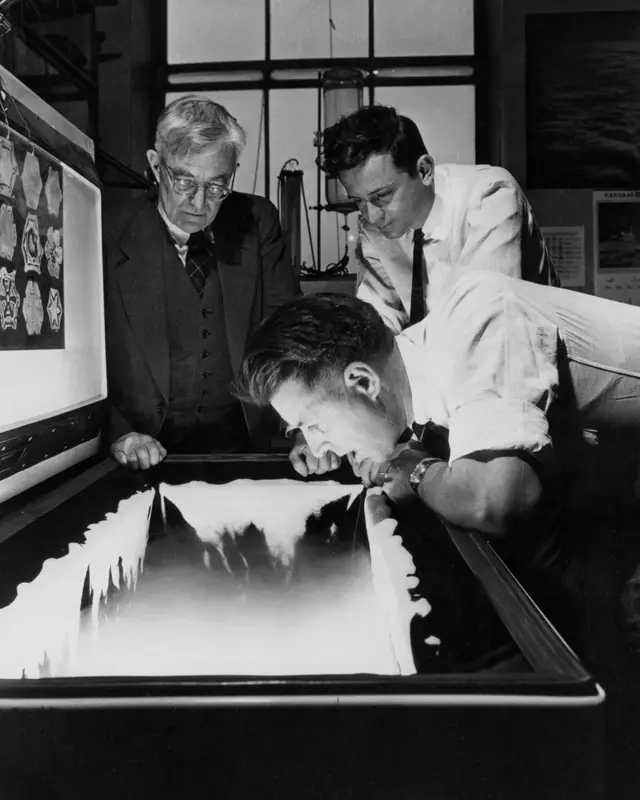

China's cloud-seeding experiments began in 1958, when an aircraft reportedly brought rain to drought-stricken Jilin province. The technique, however, had been discovered in the United States a decade earlier and, like so many innovative ideas, entirely by accident.

In the 1940s, Vincent Schaefer was a researcher at General Electric working on ways to keep aircraft from icing up in flight. He had developed a special freezer to demonstrate how ice forms in clouds.

One day, he arrived at the laboratory to find that the equipment had switched off. When he placed a piece of dry ice (solid carbon dioxide, which is extremely cold) inside it to cool the interior, he witnessed a surprising reaction: ice crystals suddenly appeared, floating inside the chamber. He had produced precipitation artificially.

A year later, in 1946, Schaefer dropped kilograms of dry ice onto supercooled clouds above the Adirondack Mountains in New York State. The experiment apparently triggered a snowfall.

After that experiment, cloud-seeding initiatives sprang up around the world, though with mixed and inconclusive results, hampered by difficulties in measuring their effects.

To demonstrate that cloud seeding works, scientists need a control weather scenario almost identical to the one in which they are intervening. “We can't make the same cloud happen twice. So we can't run a controlled experiment,” said Robert Rauber, a professor of atmospheric sciences at the University of Illinois Urbana-Champaign (US).

Seeding snow

In China and elsewhere, cloud seeding, for both experiments and practical use, is most often carried out in mountainous areas to produce snow, mainly because snow is easier to see and measure than rain.

Scientists use radar to find clouds containing supercooled liquid water (between -15°C and 0°C). They then release tiny silver iodide particles into them from aircraft or ground-based generators. The supercooled water freezes on contact with these particles, forming ice crystals in the clouds that grow heavier and eventually fall to the ground as snow or ice.

Warm-weather cloud seeding works in a similar way, but uses salt to encourage small water droplets to merge and grow until they fall to the ground. It is less common, however, because warmer clouds tend to move faster and contain less supercooled water, and because the water does not accumulate as visibly as snow, which makes monitoring harder.

China's first operational cloud-seeding base was established in 2013, and today the country has six bases that collaborate on research. Its weather modification programme is now the largest in the world, and its rain-making ambitions have grown to match.

Chief among them is the country's enormous Tianhe (“sky river”) initiative, which aims to create a water vapour corridor from the Tibetan Plateau to the dry north of China using thousands of ground-based generators.

But China also faces criticism over concerns about the wider impacts of these operations. “Applied on a large enough scale, these weather modification technologies could pose risks to the habitability and security of neighbouring countries,” said Elizabeth Chalecki, a researcher in international relations and technology governance at the Balsillie School of International Affairs (Canada).

A recent report argued that an intervention on such a large scale on the Tibetan Plateau could give China unilateral control over water resources shared with neighbouring countries such as India, leading to geopolitical tensions. On the other hand, an as-yet-unpublished analysis based on 27,000 cloud-seeding experiments in China concluded that the impact on other nations was minimal.

The potential harms of cloud seeding may be overstated, according to Katja Friedrich, a professor of atmospheric and oceanic sciences at the University of Colorado (US). For example, “there is no indication that cloud seeding gets out of control and suddenly you have this explosion that generates a storm”, she said, referring to the floods in Dubai in 2024 and in Texas in 2025, both of which were wrongly blamed on cloud seeding.

Even so, experts such as Chalecki warn of the lack of international policies capable of preventing possible cross-border impacts as China's weather modification programme advances. China might even be able to gain “an ancillary security benefit by quietly degrading the environment and habitability of a rival state”, she suggests.

Lack of evidence

There is, however, another problem with cloud seeding: according to scientists, China may simply not be producing as much rain as it claims. “I think the claims are not sufficiently supported by the data,” said Rauber, of the University of Illinois.

Over the past decade, the Chinese government has repeatedly announced that its cloud-seeding programme is achieving significant results. One press release stated that the 2025 “spring rain” initiative increased precipitation in the target area by 20% compared with 2024. And China's meteorological agency said in December 2025 that artificial rain and snow operations had produced an additional 168 billion tonnes of precipitation (a volume equivalent to about 67 million Olympic swimming pools) since 2021.
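The swimming-pool comparison can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes that one tonne of water occupies roughly one cubic metre and that an Olympic pool measures 50 m by 25 m by 2 m (2,500 cubic metres), neither of which is stated in the agency's announcement. The function name `tonnes_to_olympic_pools` is made up for this example.

```python
# Assumption: fresh water weighs about 1 tonne per cubic metre.
TONNES_PER_CUBIC_METRE = 1.0
# Assumption: an Olympic pool is 50 m x 25 m x 2 m deep = 2,500 cubic metres.
POOL_VOLUME_M3 = 50 * 25 * 2


def tonnes_to_olympic_pools(tonnes: float) -> float:
    """Convert a mass of water in tonnes to an equivalent number of pools."""
    volume_m3 = tonnes / TONNES_PER_CUBIC_METRE
    return volume_m3 / POOL_VOLUME_M3


# Figures reported in the article.
total_since_2021 = 168e9   # tonnes, claimed for 2021 to 2025
spring_rain_2025 = 31e6    # tonnes, claimed for the 2025 "spring rain" project

print(f"{tonnes_to_olympic_pools(total_since_2021) / 1e6:.1f} million pools")
print(f"{tonnes_to_olympic_pools(spring_rain_2025):,.0f} pools")
```

Under those assumptions, the 168 billion tonnes works out at 67.2 million pools, consistent with the “about 67 million” quoted above. The calculation checks only the unit conversion, not whether the precipitation was actually produced by seeding.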

“There are a lot of claims [globally], whether from government agencies or from companies that stand to benefit from cloud-seeding operations,” said Jeffrey French, an atmospheric scientist at the University of Wyoming (US). “I think there are a lot of statements [coming out of China] that cannot be scientifically validated or proven.”

In 2017, French led a significant advance in the evidence for the technique, when the “Snowie” project in the Payette Mountains of Idaho (US) collected data that unambiguously demonstrated snow production through cloud seeding. The results have since resonated internationally.

“In several cases, we were able to identify exactly where the seeding material was in the clouds and take measurements directly in those areas,” said French, the project's principal investigator. That was possible despite there being “so much natural variability, so many variations in the nature of clouds and precipitation”, he said.

The researchers also took additional measurements in nearby areas, 1 to 2 kilometres away, which allowed them to compare the two regions and show a clear difference between the amount of snow produced naturally and the amount generated artificially by the same cloud system.

It was the closest an independently funded study has come to a successful controlled experiment in nature. Snowie's extensive dataset was a milestone: it not only showed that cloud seeding can work, but also revealed the complex balance of when and how the technique works best. The data became a benchmark for a scientific field that had lacked empirical proof.

The landmark study has been cited in several Chinese cloud-seeding papers published in peer-reviewed journals, including one which states that the work “rigorously demonstrates that cloud seeding did create precipitating clouds and increased precipitation at the surface”.

Modest results

Even so, Snowie's results indicated that the impact of cloud seeding is ultimately limited. “That is why people had difficulty demonstrating the effect in these precipitation systems,” said Friedrich, of the University of Colorado. And although the technique has been proven to some extent in other contexts, even scientists who have observed the results up close question whether it is effective enough to justify the effort.

Some also believe that use of the technology has advanced faster than the scientific research, and that there is not yet enough reliable data to support the reported results. “The problem with these cloud-seeding programmes is that most are run by governments, as in China or the United Arab Emirates,” said Friedrich. “But there is very little independent analysis.”

This matters because it remains extremely difficult to distinguish precipitation generated by the intervention from what the clouds would have produced naturally. “In general, it is very hard to know whether cloud seeding works in every case,” said Adele L. Igel, an associate professor of cloud physics at the University of California, Davis (US). “Theory and science indicate that it should work, but it is difficult to verify those predictions routinely with observations and measurements.”

Numerous limitations still stand in the way of the technique working predictably. Cloud seeding, for example, has no effect if there are no clouds with the potential to produce precipitation. It is also far less effective in the warmer months, when clouds containing supercooled water are rare.

This means that, in many cases, the cost can outweigh the results, especially when aerial methods are used. Ground-based techniques, which rely on generators that release silver iodide or another agent to be carried up into the clouds by air currents, are cheaper but far less predictable. “Aerial seeding is quite efficient, but also very expensive, which is why people turn to ground-based methods,” said Friedrich, of the University of Colorado.

It is also impossible to predict precisely what the effects of broad, sustained weather modification will be, whether in China or elsewhere. “It is very difficult to assess, let alone predict, regional climate impacts and remote anomalies resulting from weather modification operations,” said Manon Simon, an academic at the University of Tasmania (Australia), who has extensively researched the potential geopolitical implications of China's programme. According to her, it is particularly complex to determine whether long-term programmes could result in more frequent or more intense droughts or floods. Identifying those risks, she adds, requires permanent monitoring and broad international cooperation.

A new frontier

In the nearly ten years since the Snowie project, seeding techniques and radar technologies have evolved, which could mean greater precipitation yields. With recent advances in drones, China has expanded its use of more sophisticated equipment and has turned to artificial intelligence (AI) to release silver iodide more precisely.

China and the United Arab Emirates are also experimenting with methods such as flare seeding and sending negative ion charges into clouds to encourage droplets to merge, a process that leads to precipitation.

Even so, as with traditional seeding, independent research conclusively proving that these new methods produce more rain remains scarce. Scientists fear that the rise in droughts worldwide, driven by climate change, will accelerate adoption of the technology without a matching increase in studies showing when and where it works cost-effectively.

Experts agree that more independent data would help identify the circumstances in which seeding can have an effect and when it is unlikely to work. The same information could guide safeguards to protect neighbouring countries from possible adverse impacts.

All of this takes time, however, and that is a hard case to make when water scarcity is already a reality and many countries want immediate solutions.
