Illustration by Pete Gamlen

Philosophers and scientists have been at war for decades over the question of what makes human beings more than complex robots

Wed 21 Jan 2015 06.00 GMT

One spring morning in Tucson, Arizona, in 1994, an unknown philosopher named David Chalmers got up to give a talk on consciousness, by which he meant the feeling of being inside your head, looking out – or, to use the kind of language that might give a neuroscientist an aneurysm, of having a soul. Though he didn’t realise it at the time, the young Australian academic was about to ignite a war between philosophers and scientists, by drawing attention to a central mystery of human life – perhaps the central mystery of human life – and revealing how embarrassingly far they were from solving it.

The scholars gathered at the University of Arizona – for what would later go down as a landmark conference on the subject – knew they were doing something edgy: in many quarters, consciousness was still taboo, too weird and new agey to take seriously, and some of the scientists in the audience were risking their reputations by attending. Yet the first two talks that day, before Chalmers’s, hadn’t proved thrilling. “Quite honestly, they were totally unintelligible and boring – I had no idea what anyone was talking about,” recalled Stuart Hameroff, the Arizona professor responsible for the event. “As the organiser, I’m looking around, and people are falling asleep, or getting restless.” He grew worried. “But then the third talk, right before the coffee break – that was Dave.” With his long, straggly hair and fondness for all-body denim, the 27-year-old Chalmers looked like he’d got lost en route to a Metallica concert. “He comes on stage, hair down to his butt, he’s prancing around like Mick Jagger,” Hameroff said. “But then he speaks. And that’s when everyone wakes up.”

The brain, Chalmers began by pointing out, poses all sorts of problems to keep scientists busy. How do we learn, store memories, or perceive things? How do you know to jerk your hand away from scalding water, or hear your name spoken across the room at a noisy party? But these were all “easy problems”, in the scheme of things: given enough time and money, experts would figure them out. There was only one truly hard problem of consciousness, Chalmers said. It was a puzzle so bewildering that, in the months after his talk, people started dignifying it with capital letters – the Hard Problem of Consciousness – and it’s this: why on earth should all those complicated brain processes feel like anything from the inside? Why aren’t we just brilliant robots, capable of retaining information, of responding to noises and smells and hot saucepans, but dark inside, lacking an inner life? And how does the brain manage it? How could the 1.4kg lump of moist, pinkish-beige tissue inside your skull give rise to something as mysterious as the experience of being that pinkish-beige lump, and the body to which it is attached?

What jolted Chalmers’s audience from their torpor was how he had framed the question. “At the coffee break, I went around like a playwright on opening night, eavesdropping,” Hameroff said. “And everyone was like: ‘Oh! The Hard Problem! The Hard Problem! That’s why we’re here!’” Philosophers had pondered the so-called “mind-body problem” for centuries. But Chalmers’s particular manner of reviving it “reached outside philosophy and galvanised everyone. It defined the field. It made us ask: what the hell is this that we’re dealing with here?”

Two decades later, we know an astonishing amount about the brain: you can’t follow the news for a week without encountering at least one more tale about scientists discovering the brain region associated with gambling, or laziness, or love at first sight, or regret – and that’s only the research that makes the headlines. Meanwhile, the field of artificial intelligence – which focuses on recreating the abilities of the human brain, rather than on what it feels like to be one – has advanced stupendously. But like an obnoxious relative who invites himself to stay for a week and then won’t leave, the Hard Problem remains. When I stubbed my toe on the leg of the dining table this morning, as any student of the brain could tell you, nerve fibres called “C-fibres” shot a message to my spinal cord, sending neurotransmitters to the part of my brain called the thalamus, which activated (among other things) my limbic system. Fine. But how come all that was accompanied by an agonising flash of pain? And what is pain, anyway?

Questions like these, which straddle the border between science and philosophy, make some experts openly angry. They have caused others to argue that conscious sensations, such as pain, don’t really exist, no matter what I felt as I hopped in anguish around the kitchen; or, alternatively, that plants and trees must also be conscious. The Hard Problem has prompted arguments in serious journals about what is going on in the mind of a zombie, or – to quote the title of a famous 1974 paper by the philosopher Thomas Nagel – the question “What is it like to be a bat?” Some argue that the problem marks the boundary not just of what we currently know, but of what science could ever explain. On the other hand, in recent years, a handful of neuroscientists have come to believe that it may finally be about to be solved – but only if we are willing to accept the profoundly unsettling conclusion that computers or the internet might soon become conscious, too.

Next week, the conundrum will move further into public awareness with the opening of Tom Stoppard’s new play, The Hard Problem, at the National Theatre – the first play Stoppard has written for the National since 2006, and the last that the theatre’s head, Nicholas Hytner, will direct before leaving his post in March. The 77-year-old playwright has revealed little about the play’s contents, except that it concerns the question of “what consciousness is and why it exists”, considered from the perspective of a young researcher played by Olivia Vinall. Speaking to the Daily Mail, Stoppard also clarified a potential misinterpretation of the title. “It’s not about erectile dysfunction,” he said.

Stoppard’s work has long focused on grand, existential themes, so the subject is fitting: when conversation turns to the Hard Problem, even the most stubborn rationalists lapse quickly into musings on the meaning of life. Christof Koch, the chief scientific officer at the Allen Institute for Brain Science, and a key player in the Obama administration’s multibillion-dollar initiative to map the human brain, is about as credible as neuroscientists get. But, he told me in December: “I think the earliest desire that drove me to study consciousness was that I wanted, secretly, to show myself that it couldn’t be explained scientifically. I was raised Roman Catholic, and I wanted to find a place where I could say: OK, here, God has intervened. God created souls, and put them into people.” Koch assured me that he had long ago abandoned such improbable notions. Then, not much later, and in all seriousness, he said that on the basis of his recent research he thought it wasn’t impossible that his iPhone might have feelings.

By the time Chalmers delivered his speech in Tucson, science had been vigorously attempting to ignore the problem of consciousness for a long time. The source of the animosity dates back to the 1600s, when René Descartes identified the dilemma that would tie scholars in knots for years to come. On the one hand, Descartes realised, nothing is more obvious and undeniable than the fact that you’re conscious. In theory, everything else you think you know about the world could be an elaborate illusion cooked up to deceive you – at this point, present-day writers invariably invoke The Matrix – but your consciousness itself can’t be illusory. On the other hand, this most certain and familiar of phenomena obeys none of the usual rules of science. It doesn’t seem to be physical. It can’t be observed, except from within, by the conscious person. It can’t even really be described. The mind, Descartes concluded, must be made of some special, immaterial stuff that didn’t abide by the laws of nature; it had been bequeathed to us by God.

This religious and rather hand-wavy position, known as Cartesian dualism, remained the governing assumption into the 18th century and the early days of modern brain study. But it was always bound to grow unacceptable to an increasingly secular scientific establishment that took physicalism – the position that only physical things exist – as its most basic principle. And yet, even as neuroscience gathered pace in the 20th century, no convincing alternative explanation was forthcoming. So little by little, the topic became taboo. Few people doubted that the brain and mind were very closely linked: if you question this, try stabbing your brain repeatedly with a kitchen knife, and see what happens to your consciousness. But how they were linked – or if they were somehow exactly the same thing – seemed a mystery best left to philosophers in their armchairs. As late as 1989, writing in the International Dictionary of Psychology, the British psychologist Stuart Sutherland could irascibly declare of consciousness that “it is impossible to specify what it is, what it does, or why it evolved. Nothing worth reading has been written on it.”

It was only in 1990 that Francis Crick, the joint discoverer of the double helix, used his position of eminence to break ranks. Neuroscience was far enough along by now, he declared in a slightly tetchy paper co-written with Christof Koch, that consciousness could no longer be ignored. “It is remarkable,” they began, “that most of the work in both cognitive science and the neurosciences makes no reference to consciousness” – partly, they suspected, “because most workers in these areas cannot see any useful way of approaching the problem”. They presented their own “sketch of a theory”, arguing that certain neurons, firing at certain frequencies, might somehow be the cause of our inner awareness – though it was not clear how.

“People thought I was crazy to be getting involved,” Koch recalled. “A senior colleague took me out to lunch and said, yes, he had the utmost respect for Francis, but Francis was a Nobel laureate and a half-god and he could do whatever he wanted, whereas I didn’t have tenure yet, so I should be incredibly careful. Stick to more mainstream science! These fringey things – why not leave them until retirement, when you’re coming close to death, and you can worry about the soul and stuff like that?”

It was around this time that David Chalmers started talking about zombies.

As a child, Chalmers was short-sighted in one eye, and he vividly recalls the day he was first fitted with glasses to rectify the problem. “Suddenly I had proper binocular vision,” he said. “And the world just popped out. It was three-dimensional to me in a way it hadn’t been.” He thought about that moment frequently as he grew older. Of course, you could tell a simple mechanical story about what was going on in the lens of his glasses, his eyeball, his retina, and his brain. “But how does that explain the way the world just pops out like that?” To a physicalist, the glasses-eyeball-retina story is the only story. But to a thinker of Chalmers’s persuasion, it was clear that it wasn’t enough: it told you what the machinery of the eye was doing, but it didn’t begin to explain that sudden, breathtaking experience of depth and clarity. Chalmers’s “zombie” thought experiment is his attempt to show why the mechanical account is not enough – why the mystery of conscious awareness goes deeper than a purely material science can explain.

“Look, I’m not a zombie, and I pray that you’re not a zombie,” Chalmers said, one Sunday before Christmas, “but the point is that evolution could have produced zombies instead of conscious creatures – and it didn’t!” We were drinking espressos in his faculty apartment at New York University, where he recently took up a full-time post at what is widely considered the leading philosophy department in the Anglophone world; boxes of his belongings, shipped over from Australia, lay unpacked around his living-room. Chalmers, now 48, recently cut his hair in a concession to academic respectability, and he wears less denim, but his ideas remain as heavy-metal as ever. The zombie scenario goes as follows: imagine that you have a doppelgänger. This person physically resembles you in every respect, and behaves identically to you; he or she holds conversations, eats and sleeps, looks happy or anxious precisely as you do. The sole difference is that the doppelgänger has no consciousness; this – as opposed to a groaning, blood-spattered walking corpse from a movie – is what philosophers mean by a “zombie”.

Such non-conscious humanoids don’t exist, of course. (Or perhaps it would be better to say that I know I’m not one, anyhow; I could never know for certain that you aren’t.) But the point is that, in principle, it feels as if they could. Evolution might have produced creatures that were atom-for-atom the same as humans, capable of everything humans can do, except with no spark of awareness inside. As Chalmers explained: “I’m talking to you now, and I can see how you’re behaving; I could do a brain scan, and find out exactly what’s going on in your brain – yet it seems it could be consistent with all that evidence that you have no consciousness at all.” If you were approached by me and my doppelgänger, not knowing which was which, not even the most powerful brain scanner in existence could tell us apart. And the fact that one can even imagine this scenario is sufficient to show that consciousness can’t just be made of ordinary physical atoms. So consciousness must, somehow, be something extra – an additional ingredient in nature.

It would be understating things a bit to say that this argument wasn’t universally well-received when Chalmers began to advance it, most prominently in his 1996 book The Conscious Mind. The withering tone of the philosopher Massimo Pigliucci sums up the thousands of words that have been written attacking the zombie notion: “Let’s relegate zombies to B-movies and try to be a little more serious about our philosophy, shall we?” Yes, it may be true that most of us, in our daily lives, think of consciousness as something over and above our physical being – as if your mind were “a chauffeur inside your own body”, to quote the spiritual author Alan Watts. But to accept this as a scientific principle would mean rewriting the laws of physics. Everything we know about the universe tells us that reality consists only of physical things: atoms and their component particles, busily colliding and combining. Above all, critics point out, if this non-physical mental stuff did exist, how could it cause physical things to happen – as when the feeling of pain causes me to jerk my fingers away from the saucepan’s edge?

Nonetheless, just occasionally, science has dropped tantalising hints that this spooky extra ingredient might be real. In the 1970s, at what was then the National Hospital for Nervous Diseases in London, the neurologist Lawrence Weiskrantz encountered a patient, known as “DB”, with a blind spot in his left visual field, caused by brain damage. Weiskrantz showed him patterns of striped lines, positioned so that they fell on his area of blindness, then asked him to say whether the stripes were vertical or horizontal. Naturally, DB protested that he could see no stripes at all. But Weiskrantz insisted that he guess the answers anyway – and DB got them right almost 90% of the time. Apparently, his brain was perceiving the stripes without his mind being conscious of them. One interpretation is that DB was a semi-zombie, with a brain like any other brain, but partially lacking the magical add-on of consciousness.

Chalmers knows how wildly improbable his ideas can seem, and takes this in his stride: at philosophy conferences, he is fond of clambering on stage to sing The Zombie Blues, a lament about the miseries of having no consciousness. (“I act like you act / I do what you do / But I don’t know / What it’s like to be you.”) “The conceit is: wouldn’t it be a drag to be a zombie? Consciousness is what makes life worth living, and I don’t even have that: I’ve got the zombie blues.” The song has improved since its debut more than a decade ago, when he used to try to hold a tune. “Now I’ve realised it sounds better if you just shout,” he said.

The consciousness debates have provoked more mudslinging and fury than most in modern philosophy, perhaps because of how baffling the problem is: opposing combatants tend not merely to disagree, but to find each other’s positions manifestly preposterous. An admittedly extreme example concerns the Canadian-born philosopher Ted Honderich, whose book On Consciousness was described, in an article by his fellow philosopher Colin McGinn in 2007, as “banal and pointless”, “excruciating”, “absurd”, running “the full gamut from the mediocre to the ludicrous to the merely bad”. McGinn added, in a footnote: “The review that appears here is not as I originally wrote it. The editors asked me to ‘soften the tone’ of the original [and] I have done so.” (The attack may have been partly motivated by a passage in Honderich’s autobiography, in which he mentions “my small colleague Colin McGinn”; at the time, Honderich told this newspaper he’d enraged McGinn by referring to a girlfriend of his as “not as plain as the old one”.)

McGinn, to be fair, has made a career from such hatchet jobs. But strong feelings only slightly more politely expressed are commonplace. Not everybody agrees there is a Hard Problem to begin with – making the whole debate kickstarted by Chalmers an exercise in pointlessness. Daniel Dennett, the high-profile atheist and professor at Tufts University outside Boston, argues that consciousness, as we think of it, is an illusion: there just isn’t anything in addition to the spongy stuff of the brain, and that spongy stuff doesn’t actually give rise to something called consciousness. Common sense may tell us there’s a subjective world of inner experience – but then common sense told us that the sun orbits the Earth, and that the world was flat. Consciousness, according to Dennett’s theory, is like a conjuring trick: the normal functioning of the brain just makes it look as if there is something non-physical going on. To look for a real, substantive thing called consciousness, Dennett argues, is as silly as insisting that characters in novels, such as Sherlock Holmes or Harry Potter, must be made up of a peculiar substance named “fictoplasm”; the idea is absurd and unnecessary, since the characters do not exist to begin with.

This is the point at which the debate tends to collapse into incredulous laughter and head-shaking: neither camp can quite believe what the other is saying. To Dennett’s opponents, he is simply denying the existence of something everyone knows for certain: their inner experience of sights, smells, emotions and the rest. (Chalmers has speculated, largely in jest, that Dennett himself might be a zombie.) It’s like asserting that cancer doesn’t exist, then claiming you’ve cured cancer; more than one critic of Dennett’s most famous book, Consciousness Explained, has joked that its title ought to be Consciousness Explained Away. Dennett’s reply is characteristically breezy: explaining things away, he insists, is exactly what scientists do. When physicists first concluded that the only difference between gold and silver was the number of subatomic particles in their atoms, he writes, people could have felt cheated, complaining that their special “goldness” and “silveriness” had been explained away. But everybody now accepts that goldness and silveriness are really just differences in atoms. However hard it feels to accept, we should concede that consciousness is just the physical brain, doing what brains do.

“The history of science is full of cases where people thought a phenomenon was utterly unique, that there couldn’t be any possible mechanism for it, that we might never solve it, that there was nothing in the universe like it,” said Patricia Churchland of the University of California, a self-described “neurophilosopher” and one of Chalmers’s most forthright critics. Churchland’s opinion of the Hard Problem, which she expresses in caustic vocal italics, is that it is nonsense, kept alive by philosophers who fear that science might be about to eliminate one of the puzzles that has kept them gainfully employed for years. Look at the precedents: in the 17th century, scholars were convinced that light couldn’t possibly be physical – that it had to be something occult, beyond the usual laws of nature. Or take life itself: early scientists were convinced that there had to be some magical spirit – the élan vital – that distinguished living beings from mere machines. But there wasn’t, of course. Light is electromagnetic radiation; life is just the label we give to certain kinds of objects that can grow and reproduce. Eventually, neuroscience will show that consciousness is just brain states. Churchland said: “The history of science really gives you perspective on how easy it is to talk ourselves into this sort of thinking – that if my big, wonderful brain can’t envisage the solution, then it must be a really, really hard problem!”

Solutions have regularly been floated: the literature is awash in references to “global workspace theory”, “ego tunnels”, “microtubules”, and speculation that quantum theory may provide a way forward. But the intractability of the arguments has caused some thinkers, such as Colin McGinn, to raise an intriguing if ultimately defeatist possibility: what if we’re just constitutionally incapable of ever solving the Hard Problem? After all, our brains evolved to help us solve down-to-earth problems of survival and reproduction; there is no particular reason to assume they should be capable of cracking every big philosophical puzzle we happen to throw at them. This stance has become known as “mysterianism” – after the 1960s Michigan rock’n’roll band ? and the Mysterians, who themselves borrowed the name from a work of Japanese sci-fi – but the essence of it is that there’s actually no mystery to why consciousness hasn’t been explained: it’s that humans aren’t up to the job. If we struggle to understand what it could possibly mean for the mind to be physical, maybe that’s because we are, to quote the American philosopher Josh Weisberg, in the position of “squirrels trying to understand quantum mechanics”. In other words: “It’s just not going to happen.”

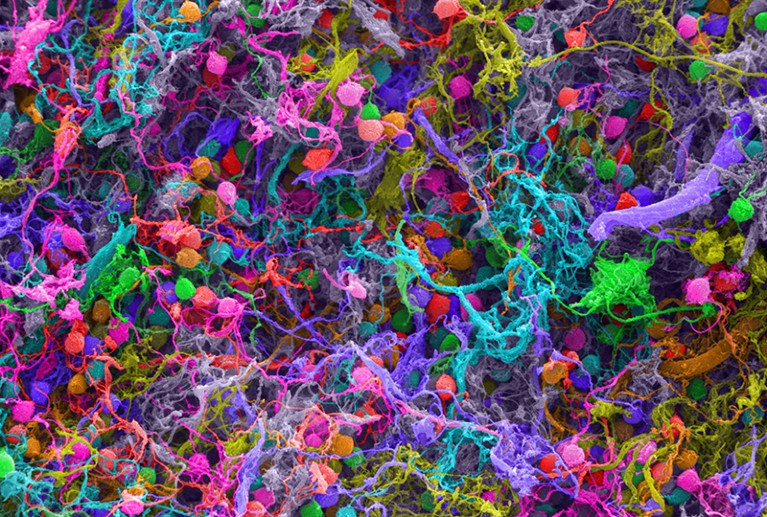

Or maybe it is: in the last few years, several scientists and philosophers, Chalmers and Koch among them, have begun to look seriously again at a viewpoint so bizarre that it has been neglected for more than a century, except among followers of eastern spiritual traditions, or in the kookier corners of the new age. This is “panpsychism”, the dizzying notion that everything in the universe might be conscious, or at least potentially conscious, or conscious when put into certain configurations. Koch concedes that this sounds ridiculous: when he mentions panpsychism, he has written, “I often encounter blank stares of incomprehension.” But when it comes to grappling with the Hard Problem, crazy-sounding theories are an occupational hazard. Besides, panpsychism might help unravel an enigma that has attached to the study of consciousness from the start: if humans have it, and apes have it, and dogs and pigs probably have it, and maybe birds, too – well, where does it stop?

Growing up as the child of German-born Catholics, Koch had a dachshund named Purzel. According to the church, because he was a dog, that meant he didn’t have a soul. But he whined when anxious and yelped when injured – “he certainly gave every appearance of having a rich inner life”. These days we don’t much speak of souls, but it is widely assumed that many non-human brains are conscious – that a dog really does feel pain when he is hurt. The problem is that there seems to be no logical reason to draw the line at dogs, or sparrows or mice or insects, or, for that matter, trees or rocks. Since we don’t know how the brains of mammals create consciousness, we have no grounds for assuming it’s only the brains of mammals that do so – or even that consciousness requires a brain at all. Which is how Koch and Chalmers have both found themselves arguing, in the pages of the New York Review of Books, that an ordinary household thermostat or a photodiode, of the kind you might find in your smoke detector, might in principle be conscious.

The argument unfolds as follows: physicists have no problem accepting that certain fundamental aspects of reality – such as space, mass, or electrical charge – just do exist. They can’t be explained as being the result of anything else. Explanations have to stop somewhere. The panpsychist hunch is that consciousness could be like that, too – and that if it is, there is no particular reason to assume that it only occurs in certain kinds of matter.

Koch’s specific twist on this idea, developed with the neuroscientist and psychiatrist Giulio Tononi, is narrower and more precise than traditional panpsychism. It is the argument that anything at all could be conscious, providing that the information it contains is sufficiently interconnected and organised. The human brain certainly fits the bill; so do the brains of cats and dogs, though their consciousness probably doesn’t resemble ours. But in principle the same might apply to the internet, or a smartphone, or a thermostat. (The ethical implications are unsettling: might we owe the same care to conscious machines that we bestow on animals? Koch, for his part, tries to avoid stepping on insects as he walks.)

Unlike the vast majority of musings on the Hard Problem, moreover, Tononi and Koch’s “integrated information theory” has actually been tested. A team of researchers led by Tononi has designed a device that stimulates the brain with electrical voltage, to measure how interconnected and organised – how “integrated” – its neural circuits are. Sure enough, when people fall into a deep sleep, or receive an injection of anaesthetic, as they slip into unconsciousness, the device demonstrates that their brain integration declines, too. Among patients suffering “locked-in syndrome” – who are as conscious as the rest of us – levels of brain integration remain high; among patients in coma – who aren’t – they don’t. Gather enough of this kind of evidence, Koch argues, and in theory you could take any device, measure the complexity of the information contained in it, then deduce whether or not it was conscious.

But even if one were willing to accept the perplexing claim that a smartphone could be conscious, could you ever know that it was true? Surely only the smartphone itself could ever know that? Koch shrugged. “It’s like black holes,” he said. “I’ve never been in a black hole. Personally, I have no experience of black holes. But the theory [that predicts black holes] seems always to be true, so I tend to accept it.”

It would be satisfying for multiple reasons if a theory like this were eventually to vanquish the Hard Problem. On the one hand, it wouldn’t require a belief in spooky mind-substances that reside inside brains; the laws of physics would escape largely unscathed. On the other hand, we wouldn’t need to accept the strange and soulless claim that consciousness doesn’t exist, when it’s so obvious that it does. On the contrary, panpsychism says, it’s everywhere. The universe is throbbing with it.

Last June, several of the most prominent combatants in the consciousness debates – including Chalmers, Churchland and Dennett – boarded a tall-masted yacht for a trip among the ice floes of Greenland. This conference-at-sea was funded by a Russian internet entrepreneur, Dmitry Volkov, the founder of the Moscow Centre for Consciousness Studies. About 30 academics and graduate students, plus crew, spent a week gliding through dark waters, past looming snow-topped mountains and glaciers, in a bracing chill conducive to focused thought, giving the problem of consciousness another shot. In the mornings, they visited islands to go hiking, or examine the ruins of ancient stone huts; in the afternoons, they held conference sessions on the boat. For Chalmers, the setting only sharpened the urgency of the mystery: how could you feel the Arctic wind on your face, take in the visual sweep of vivid greys and whites and greens, and still claim conscious experience was unreal, or that it was simply the result of ordinary physical stuff, behaving ordinarily?

The question was rhetorical. Dennett and Churchland were not converted; indeed, Chalmers has no particular confidence that a consensus will emerge in the next century. “Maybe there’ll be some amazing new development that leaves us all, now, looking like pre-Darwinians arguing about biology,” he said. “But it wouldn’t surprise me in the least if in 100 years, neuroscience is incredibly sophisticated, if we have a complete map of the brain – and yet some people are still saying, ‘Yes, but how does any of that give you consciousness?’ while others are saying ‘No, no, no – that just is the consciousness!’” The Greenland cruise concluded in collegial spirits, and mutual incomprehension.

It would be poetic – albeit deeply frustrating – were it ultimately to prove that the one thing the human mind is incapable of comprehending is itself. An answer must be out there somewhere. And finding it matters: indeed, one could argue that nothing else could ever matter more – since anything at all that matters, in life, only does so as a consequence of its impact on conscious brains. Yet there’s no reason to assume that our brains will be adequate vessels for the voyage towards that answer. Nor that, were we to stumble on a solution to the Hard Problem, on some distant shore where neuroscience meets philosophy, we would even recognise that we’d found it.

- This article was amended on 21 January 2015. The conference-at-sea was funded by the Russian internet entrepreneur Dmitry Volkov, not Dmitry Itskov as was originally stated. This has been corrected.