Focar em soluções tecnológicas para mudanças climáticas parece uma tentativa para se desviar dos obstáculos políticos mais desafiadores.

By MIT Technology Review, 6 de abril de 2021

Em seu novo livro Como evitar um desastre climático, Bill Gates adota uma abordagem tecnológica para compreender a crise climática. Gates começa com os 51 bilhões de toneladas de gases com efeito de estufa criados por ano. Ele divide essa poluição em setores com base em seu impacto, passando pelo elétrico, industrial e agrícola para o de transporte e construção civil. Do começo ao fim, Gates se mostra adepto a diminuir as complexidades do desafio climático, dando ao leitor heurísticas úteis para distinguir maiores problemas tecnológicos (cimento) de menores (aeronaves).

Presente nas negociações climáticas de Paris em 2015, Gates e dezenas de indivíduos bem-afortunados lançaram o Breakthrough Energy, um fundo de capital de investimento interdependente lobista empenhado em conduzir pesquisas. Gates e seus companheiros investidores argumentaram que tanto o governo federal quanto o setor privado estão investindo pouco em inovação energética. A Breakthrough pretende preencher esta lacuna, investindo em tudo, desde tecnologia nuclear da próxima geração até carne vegetariana com sabor de carne bovina. A primeira rodada de US$ 1 bilhão do fundo de investimento teve alguns sucessos iniciais, como a Impossible Foods, uma fabricante de hambúrgueres à base de plantas. O fundo anunciou uma segunda rodada de igual tamanho em janeiro.

Um esforço paralelo, um acordo internacional chamado de Mission Innovation, diz ter convencido seus membros (o setor executivo da União Europeia junto com 24 países incluindo China, os EUA, Índia e o Brasil) a investirem um adicional de US$ 4,6 bilhões por ano desde 2015 para a pesquisa e desenvolvimento da energia limpa.

Essas várias iniciativas são a linha central para o livro mais recente de Gates, escrito a partir de uma perspectiva tecno-otimista. “Tudo que aprendi a respeito do clima e tecnologia me deixam otimista… se agirmos rápido o bastante, [podemos] evitar uma catástrofe climática,” ele escreveu nas páginas iniciais.

Como muitos já assinalaram, muito da tecnologia necessária já existe, muito pode ser feito agora. Por mais que Gates não conteste isso, seu livro foca nos desafios tecnológicos que ele acredita que ainda devem ser superados para atingir uma maior descarbonização. Ele gasta menos tempo nos percalços políticos, escrevendo que pensa “mais como um engenheiro do que um cientista político.” Ainda assim, a política, com toda a sua desordem, é o principal impedimento para o progresso das mudanças climáticas. E engenheiros devem entender como sistemas complexos podem ter ciclos de feedback que dão errado.

Sim, ministro

Kim Stanley Robinson, este sim pensa como um cientista político. O começo de seu romance mais recente The Ministry for the Future (ainda sem tradução para o português), se passa apenas a alguns anos no futuro, em 2025, quando uma onda de calor imensa atinge a Índia, matando milhões de pessoas. A protagonista do livro, Mary Murphy, comanda uma agência da ONU designada a representar os interesses das futuras gerações em uma tentativa de unir os governos mundiais em prol de uma solução climática. Durante todo o livro a equidade intergeracional e várias formas de políticas distributivas em foco.

Se você já viu os cenários que o Painel Intergovernamental sobre Mudanças Climáticas (IPCC) desenvolve para o futuro, o livro de Robinson irá parecer familiar. Sua história questiona as políticas necessárias para solucionar a crise climática, e ele certamente fez seu dever de casa. Apesar de ser um exercício de imaginação, há momentos em que o romance se assemelha mais a um seminário de graduação sobre ciências sociais do que a um trabalho de ficção escapista. Os refugiados climáticos, que são centrais para a história, ilustram a forma como as consequências da poluição atingem a população global mais pobre com mais força. Mas os ricos produzem muito mais carbono.

Ler Gates depois de Robinson evidencia a inextricável conexão entre desigualdade e mudanças climáticas. Os esforços de Gates sobre a questão do clima são louváveis. Mas quando ele nos diz que a riqueza combinada das pessoas apoiando seu fundo de investimento é de US$ 170 bilhões, ficamos um pouco intrigados que estes tenham dedicado somente US$ 2 bilhões para soluções climáticas, menos de 2% de seus ativos. Este fato por si só é um argumento favorável para taxar fortunas: a crise climática exige ação governamental. Não pode ser deixado para o capricho de bilionários.

Quanto aos bilionários, Gates é possivelmente um dos bonzinhos. Ele conta histórias sobre como usa sua fortuna para ajudar os pobres e o planeta. A ironia dele escrever um livro sobre mudanças climáticas quando voa em um jato particular e detém uma mansão de 6.132 m² não é algo que passa despercebido pelo leitor, e nem por Gates, que se autointitula um “mensageiro imperfeito sobre mudanças climáticas”. Ainda assim, ele é inquestionavelmente um aliado do movimento climático.

Mas ao focar em inovações tecnológicas, Gates minimiza a participação dos combustíveis fósseis na obstrução deste progresso. Peculiarmente, o ceticismo climático não é mencionado no livro. Lavando as mãos no que diz respeito à polarização política, Gates nunca faz conexão com seus colegas bilionários Charles e David Koch, que enriqueceram com os petroquímicos e têm desempenhado papel de destaque na reprodução do negacionismo climático.

Por exemplo, Gates se admira que para a vasta maioria dos americanos aquecedores elétricos são na verdade mais baratos do que continuar a usar combustíveis fósseis. Para ele, as pessoas não adotarem estas opções mais econômicas e sustentáveis é um enigma. Mas, não é assim. Como os jornalistas Rebecca Leber e Sammy Roth reportaram em Mother Jones e no Los Angeles Times, a indústria do gás está investindo em defensores e criando campanhas de marketing para se opor à eletrificação e manter as pessoas presas aos combustíveis fósseis.

Essas forças de oposição são melhor vistas no livro do Robinson do que no de Gates. Gates teria se beneficiado se tivesse tirado partido do trabalho que Naomi Oreskes, Eric Conway, Geoffrey Supran, entre outros, têm feito para documentar os esforços persistentes das empresas de combustíveis fósseis em semear dúvida sobre a ciência climática para a população.

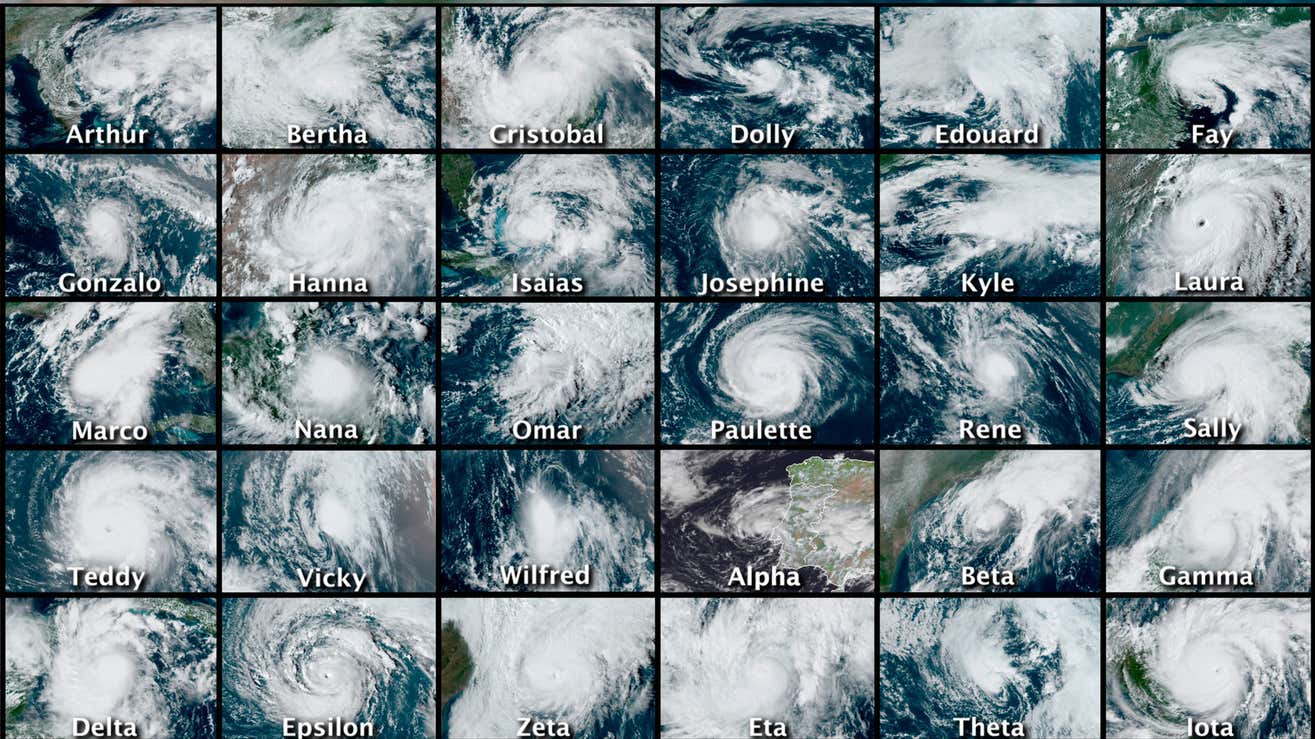

No entanto, uma coisa que Gates e Robinson têm em comum é a opinião de que a geoengenharia, intervenções monumentais para combater os sintomas ao invés das causas das mudanças climáticas, venha a ser inevitável. Em The Ministry for the Future, a geoengenharia solar, que vem a ser a pulverização de partículas finas na atmosfera para refletir mais do calor solar de volta para o espaço, é usada na sequência dos acontecimentos da onda de calor mortal que inicia a história. E mais tarde, alguns cientistas vão aos polos e inventam elaborados métodos para remover água derretida de debaixo de geleiras para evitar que avançasse para o mar. Apesar de alguns contratempos, eles impedem a subida do nível do mar em vários metros. É possível imaginar Gates aparecendo no romance como um dos primeiros a financiar estes esforços. Como ele próprio observa em seu livro, ele tem investido em pesquisa sobre geoengenharia solar há anos.

A pior parte

O título do novo livro de Elizabeth Kolbert, Under a White Sky (ainda sem tradução para o português), é uma referência a esta tecnologia nascente, já que implementá-la em larga escala pode alterar a cor do céu de azul para branco.

Kolbert observa que o primeiro relatório sobre mudanças climáticas foi parar na mesa do presidente Lyndon Johnson em 1965. Este relatório não argumentava que deveríamos diminuir as emissões de carbono nos afastando de combustíveis fósseis. No lugar, defendia mudar o clima por meio da geoengenharia solar, apesar do termo ainda não ter sido inventado. É preocupante que alguns se precipitem imediatamente para essas soluções arriscadas em vez de tratar a raiz das causas das mudanças climáticas.

Ao ler Under a White Sky, somos lembrados das formas com que intervenções como esta podem dar errado. Por exemplo, a cientista e escritora Rachel Carson defendeu importar espécies não nativas como uma alternativa a utilizar pesticidas. No ano após o seu livro Primavera Silenciosa ser publicado, em 1962, o US Fish and Wildlife Service trouxe carpas asiáticas para a América pela primeira vez, a fim de controlar algas aquáticas. Esta abordagem solucionou um problema, mas criou outro: a disseminação dessa espécie invasora ameaçou às locais e causou dano ambiental.

Como Kolbert observa, seu livro é sobre “pessoas tentando solucionar problemas criados por pessoas tentando solucionar problemas.” Seu relato cobre exemplos incluindo esforços malfadados de parar a disseminação das carpas, as estações de bombeamento em Nova Orleans que aceleram o afundamento da cidade e as tentativas de seletivamente reproduzir corais que possam tolerar temperaturas mais altas e a acidificação do oceano. Kolbert tem senso de humor e uma percepção aguçada para consequências não intencionais. Se você gosta do seu apocalipse com um pouco de humor, ela irá te fazer rir enquanto Roma pega fogo.

Em contraste, apesar de Gates estar consciente das possíveis armadilhas das soluções tecnológicas, ele ainda enaltece invenções como plástico e fertilizante como vitais. Diga isso para as tartarugas marinhas engolindo lixo plástico ou as florações de algas impulsionadas por fertilizantes destruindo o ecossistema do Golfo do México.

Com níveis perigosos de dióxido de carbono na atmosfera, a geoengenharia pode de fato se provar necessária, mas não deveríamos ser ingênuos sobre os riscos. O livro de Gates tem muitas ideias boas e vale a pena a leitura. Mas para um panorama completo da crise que enfrentamos, certifique-se de também ler Robinson e Kolbert.

Você precisa fazer login para comentar.