Monday, July 9, 2012

Paul Voosen, E&E reporter

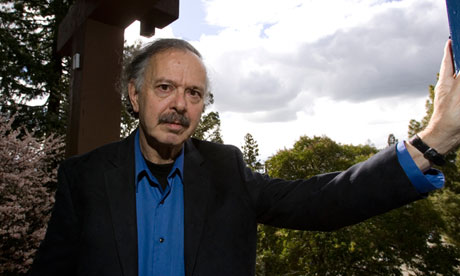

Jon Krosnick has seen the frustration etched into the faces of climate scientists.

For 15 years, Krosnick has charted the rising public belief in global warming. Yet even as the field’s implications have become clearer, action has remained elusive. Science seemed to hit the limits of its influence. It is a result that has prompted some researchers to cross their world’s no man’s land, from advice to activism.

As Krosnick watched climate scientists call for government action, he began pondering a recent small dip in the public’s belief. And he wondered: Could researchers’ move into the political world be undermining their scientific message?

Stanford’s Jon Krosnick has been studying the public’s belief in climate change for 15 years, but only recently did he decide to probe their reaction to scientists’ advocacy. Photo courtesy of Jon Krosnick.

“What if a message involves two different topics, one trustworthy and one not trustworthy?” said Krosnick, a communication and psychology professor at Stanford University. “Can the general public detect crossing that line?”

His results, not yet published, would seem to say they can.

Using a national survey, Krosnick has found that, among low-income and low-education respondents, climate scientists suffered damage to their trustworthiness and credibility when they veered from describing science into calling on viewers to ask the government to halt global warming. And not only did trust in the messenger fall; even the viewers’ belief in the reality of human-caused warming dropped steeply.

It is a warning that, even as the frustration of inaction mounts and the politicization of climate science deepens, researchers must be careful in getting off the political sidelines.

“The advice that comes out of this work is that all of us, when we claim to have expertise and offer opinions on matters [in the world], need to be guarded about how far we’re willing to go,” Krosnick said. Speculation, he added, “could compromise everything.”

Krosnick’s survey is just the latest social science revelation that has reordered how natural scientists understand their role in the world. Many of these lessons have stemmed from the public’s and politicians’ reactions to climate change, which has provided a case study of how science communication works and doesn’t work. Complexity, these researchers have found, does not stop at their discipline’s verge.

For decades, most members of the natural sciences held a simple belief that the public stood lost, holding out empty mental buckets for researchers to fill with knowledge, if they could only get through to them. But, it turns out, not only are those buckets already full with a mix of ideology and cultural belief, but it is incredibly fraught, and perhaps ineffective, for scientists to suggest where those contents should be tossed.

It’s been a difficult lesson for researchers.

“Many of us have been saddened that the world has done so little about it,” said Richard Somerville, a meteorologist at the Scripps Institution of Oceanography and former author of the United Nations’ authoritative report on climate change.

“A lot of physical climate scientists, myself included, have in the past not been knowledgeable about what the social sciences have been saying,” he added. “People who know a lot about the science of communication … [are] on board now. But we just don’t see that reflected in the policy process.”

While not as outspoken as NASA’s James Hansen, who has taken a high-profile moral stand alongside groups like 350.org and Greenpeace, Somerville has been a leader in bringing scientists together to call for greenhouse gas reductions. He helped organize the 2007 Bali declaration, a pointed letter from more than 200 scientists urging negotiators to limit global CO2 levels well below 450 parts per million.

Such declarations, in the end, have done little, Somerville said.

“If you look at the effect this has had on the policy process, it is very, very small,” he said.

This failed influence has spurred scientists like Somerville to partner closely with social scientists, seeking to understand why their message has failed. It is an effort that received a seal of approval this spring, when the National Academy of Sciences, the nation’s premier research body, hosted a two-day meeting on the science of science communication. Many of those sessions pivoted on public views of climate change.

It’s a discussion that’s been long overdue. When it comes to how the public learns about expert opinions, assumptions mostly rule in the sciences, said Dan Kahan, a professor of law and psychology at Yale Law School.

“Scientists are filled with conjectures that are plausible about how people make sense about information,” Kahan said, “only some fraction of which [are] correct.”

Shifting dynamic

Krosnick’s work began with a simple, hypothetical scene: NASA’s Hansen, whose scientific work on climate change is widely respected, walks into the Oval Office.

As he has since the 1980s, Hansen rattles off the incontrovertible, ever-increasing evidence of human-caused climate change. It’s a stunning litany, authoritative in scope, and one the fictional president, be it a Bush or an Obama, must judge against Hansen’s scientific credentials, backed by publications and institutions of the highest order. If Hansen stops there, one might think, the case is made.

But he doesn’t stop. Hansen continues, arguing, as a citizen, for an immediate carbon tax.

“Whoa, there!” Krosnick’s president might think. “He’s crossed into my domain, and he’s out of touch with how policy works.” And if Hansen is willing to offer opinions where he lacks expertise, the president starts to wonder: “Can I trust any of his work?”

Part of Scripps’ legendary climate team — Charles David Keeling was an early mentor — Richard Somerville helped organize the 2007 Bali declaration by climate scientists, calling for government action on CO2 emissions. Photo by Sylvia Bal Somerville.

Researchers have studied the process of persuasion for 50 years, Krosnick said. Over that time, a few vital truths have emerged, including that trust in a source matters. But looking back over past work, Krosnick found no answer to this question. The treatment was simplistic. Messengers were either trustworthy or not. No one had considered the case of two messages, one trusted and one shaky, from the same person.

The advocacy of climate scientists provided an excellent path into this shifting dynamic.

Krosnick’s team hunted down video of climate scientists first discussing the science of climate change and then, in the same interview, calling for viewers to pressure the government to act on global warming. (Out of fears of bruised feelings, Krosnick won’t disclose the specific scientists cited.) They cut the video in two edits: one showing only the science, and one showing the science and then the call to arms.

Krosnick then showed a nationally representative sample of 793 Americans one of three videos: the science-only cut, the science-and-politics cut, or a control video about baking meatloaf (the last being closer to politics than Krosnick might admit). The viewers were then asked a series of questions about both their opinion of the scientist’s credibility and their overall beliefs on global warming.

For a cohort of 548 respondents who had either a household income under $50,000 or no more than a high school diploma, the results were stunning and statistically significant. Across the board, the move into politics undermined the science.

The viewers’ trust in the scientist dropped 16 percentage points, from 48 to 32 percent. Their belief in the scientist’s accuracy fell from 47 to 36 percent. Their overall trust in all scientists went from 60 to 52 percent. Their belief that government should “do a lot” to stop warming fell from 62 to 49 percent. And their belief that humans have caused climate change fell 14 percentage points, from 81 to 67 percent.

Krosnick is quick to note the study’s caveats. First, educated or wealthy viewers had no significant reaction to the political call and seemed able to parse the difference between science and a personal political view. The underlying reasons for the drop are far from clear, as well — it could simply be a function of climate change’s politicization. And far more testing needs to be done to see whether this applies in other contexts.

With further evidence, though, the implications could be widespread, Krosnick said.

“Is it the case that the principle might apply broadly?” he asked. “Absolutely.”

‘Fraught with misadventure’

Krosnick’s study is likely rigorous and useful — he is known for his careful methods — but it still carries with it a simple, possibly misleading frame, several scientists said.

Most of all, it remains hooked to a premise that words float straight from the scientist’s lips to the public’s ears. The idea that people learn from scientists at all, or that they are simply misunderstanding scientific conclusions, is not how reality works, Yale’s Kahan said.

“The thing that goes into the ear is fraught with misadventure,” he said.

Kahan has been at the forefront of charting how the empty-bucket theory of science communication — called the deficit model — fails. People interpret new information within the context of their own cultural beliefs, peers and politics. They use their reasoning to pick the evidence that supports their views, rather than the other way around. Indeed, recent work by Kahan found that higher-educated respondents were more likely to be polarized than their less-educated peers.

Krosnick’s study will surely spur new investigations, Kahan said, though he resisted definite remarks until he could see the final work. Even if the study’s conditions aren’t realistic, a simple model can have “plenty of implications for all kinds of ways of which people become exposed to science,” he said.

The survey sits well with other research in the field and carries an implication about what role scientists should play in scientific debates, added Matthew Nisbet, a communication professor at American University.

“As soon as you start talking about a policy option, you’re presenting information that is potentially threatening to people’s values or identity,” he said. The public, he added, doesn’t “view scientists and scientific information in a vacuum.”

The deficit model has remained an enduring frame for scientists, many of whom are just becoming aware of social science work on the problem. Kahan compares it to the stages of grief. The first stage was that the truth just needs to be broadcast to change minds. The second, and one still influential in the scientific world, is that if the message is just simplified and the right images used, then the deficit will be filled.

“That too, I think, is a stage of misperception about how this works,” Kahan said.

Take the hand-wringing about science education that accompanied a recent poll finding that 46 percent of Americans believed in a creationist origin for humans. It’s a result that speaks to belief, not an understanding of evolution. Many surveyed who believed in evolution would still fail to explain natural selection, mutation or genetic variance, Kahan said, just as they don’t have to understand relativity to use their GPS.

Much of science doesn’t run up against the public’s belief systems and is accepted with little fuss. It’s not as if Louis Pasteur had to sell pasteurization by using slick images of children getting sick; for nearly all of society, it was simply a useful tool. People want to defer to the experts, as long as they don’t have to concede their beliefs on the way.

“People know what’s known without having a comprehension of why that’s the truth,” Kahan said.

There remains a danger in the emerging consensus that all scientific knowledge is filtered by the motivated reasoning of political and cultural ideology, Nisbet added. Not all people can be sorted by two, or even four, variables.

“In the new ideological deficit model, we tend to assume that failures in communication are caused by conservative media and conservative psychology,” he said. “The danger in this model is that we define the public in exclusively binary terms, as liberals versus conservatives, deniers versus believers.”

‘Crossing that line’

So why do climate scientists, more than most fields, cross the line into advocacy?

Most of all, it’s because their scientific work tells them the problem is so pressing, and time dependent, given the centuries-long life span of CO2 emissions, Somerville said.

“You get to the point where the emissions are large enough that you’ve run out of options,” he said. “You can no longer limit [it]. … We may be at that point already.”

There may also be less friction for scientists to suggest communal solutions to warming because, as Nisbet’s work has found, scientists tend to skew more liberal than the general population, with more than 50 percent of one U.S. science society self-identifying as “liberal.” Given this outlook, they are more likely to accept efforts like cap and trade, a policy that, in implying a “cap” on activity, rubbed conservatives the wrong way.

A prolific law professor and psychologist at Yale, Dan Kahan has been charting how the public comes to, and understands, science. Photo courtesy of Dan Kahan.

“Not a lot of scientists would question if this is an effective policy,” Nisbet said.

It is not that scientists are unaware that they are moving into policy prescription, either. Most would intuitively know the line between their work and its political implications.

“I think many are aware when they’re crossing that line,” said Roger Pielke Jr., an environmental studies professor at the University of Colorado, Boulder, “but they’re not aware of the consequences [of] doing so.”

This willingness to cross into advocacy could also stem from the fact that it is the next logical skirmish. The battle for public opinion on the reality of human-driven climate change is already over, Pielke said, “and it’s been won … by the people calling for action.”

While there are slight fluctuations in public belief, in general a large majority of Americans side with what scientists say about the existence and causes of climate change. It’s not unanimous, he said, but it’s larger than the numbers who supported actions like the Montreal Protocol, the bank bailout or the Iraq War.

What has shifted has been its politicization: As more Republicans have begun to disbelieve global warming, Democrats have rallied to reinforce the science. And none of it is about the actual science, of course, a fact Scripps’ Somerville now understands. The dispute is a code, speaking for fear of the policies that could follow if the science is accepted.

Doubters of warming don’t just hear the science. A policy is attached to it in their minds.

“Here’s a fact,” Pielke said. “And you have to change your entire lifestyle.”

For all the focus on how scientists talk to the public — whether Hansen has helped or hurt his cause — Yale’s Kahan ultimately thinks the discussion will mean very little. Ask most of the public who Hansen is, and they’ll mention something about the Muppets. It can be hard to accept, for scientists and journalists, but their efforts at communication are often of little consequence, he said.

“They’re not the primary source of information,” Kahan said.

‘A credible voice’

Like many of his peers, Somerville has suffered for his acts of advocacy.

“We all get hate email,” he said. “I’ve given congressional testimony and been denounced as an arrogant elitist hiding behind a discredited organization. Every time I’m on national news, I get a spike in ugly email. … I’ve received death threats.”

There are also pressures within the scientific community. As an elder statesman, Somerville does not have to worry about his career. But he tells young scientists to keep their heads down, working on technical papers. There is peer pressure to stay out of politics, a tension felt even by Somerville’s friend, the late Stephen Schneider, also at Stanford, who was long one of the country’s premier speakers on climate science.

He was publicly lauded, but many in the climate science community grumbled, Somerville said, that Schneider should “stop being a motormouth and start publishing technical papers.”

But there is a reason tradition has sustained the distinction between advising policymakers and picking solutions, one Krosnick’s work seems to ratify, said Michael Mann, a climatologist at Pennsylvania State University and a longtime target of climate contrarians.

“It is thoroughly appropriate, as a scientist, to discuss how our scientific understanding informs matters of policy, but … we should stop short of trying to prescribe policy,” Mann said. “This distinction is, in my view, absolutely critical.”

Somerville still supports the right of scientists to speak out as concerned citizens, as he has done, and as his friend, NASA’s Hansen, has done more stridently, protesting projects like the Keystone XL pipeline. As long as great care is taken to separate the facts from the political opinion, scientists should speak their minds.

“I don’t think being a scientist deprives you of the right to have a viewpoint,” he said.

Somerville often returns to a quote from the late Sherwood Rowland, a Nobel laureate from the University of California, Irvine, who discovered the threat chlorofluorocarbons posed to ozone: “What’s the use of having developed a science well enough to make predictions if, in the end, all we’re willing to do is stand around and wait for them to come true?”

Somerville asked Rowland several times whether the same held for global warming.

“Yes, absolutely,” he replied.

It’s an argument that Krosnick has heard from his own friends in climate science. But often this fine distinction gets lost in translation, as advocacy groups present the scientist’s personal message as the message of “science.” It’s tempting to offer advice, and Krosnick feels it himself when reporters call, but restraint may need to rule.

“In order to preserve a credible voice in public dialogue,” Krosnick said, “it might be that scientists such as myself need to restrain ourselves as speaking as public citizens.”

Broader efforts of communication, beyond scientists, could still mobilize the public, Nisbet said. Leave aside the third of the population who are in denial or alarmed about climate change, he said, and figure out how to make it relevant to the ambivalent middle.

“We have yet to really do that on climate change,” he said.

Somerville is continuing his efforts to improve communication from scientists. Another Bali declaration is unlikely, though. What he’d really like to do is get trusted messengers from different moral realms beyond science — leaders like the Dalai Lama — to speak repeatedly on climate change.

It’s all Somerville can do. It would be too painful to accept the other option, that climate change is like racism, war or poverty — problems the world has never abolished.

“[It] may well be that it is a problem that is too difficult for humanity to solve,” he said.
