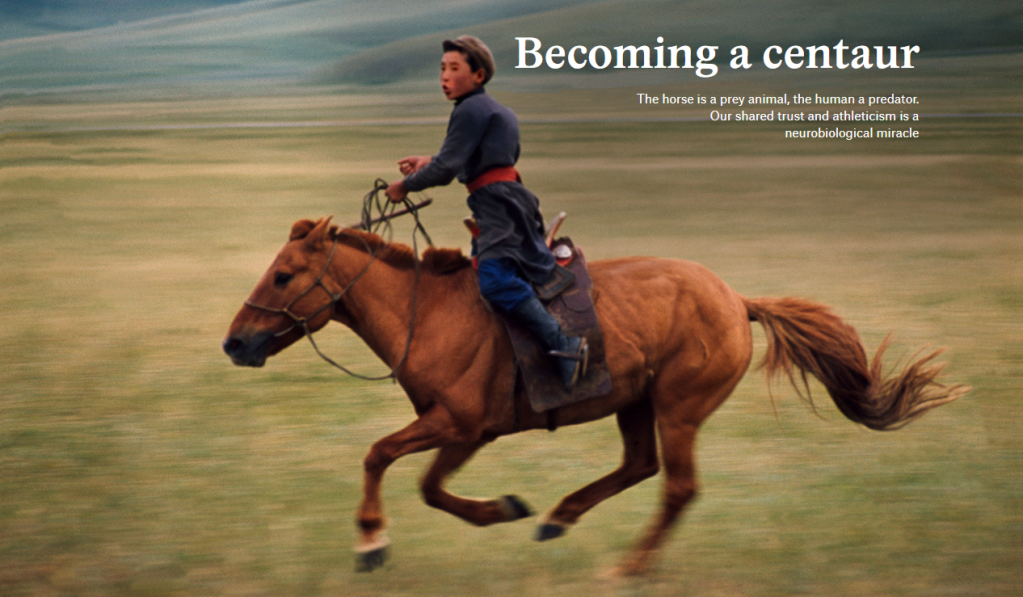

The horse is a prey animal, the human a predator. Our shared trust and athleticism is a neurobiological miracle

Janet Jones – 14 January 2022

Horse-and-human teams perform complex manoeuvres in competitions of all sorts. Together, we can gallop up to obstacles standing 8 feet (2.4 metres) high, leave the ground, and fly blind – neither party able to see over the top until after the leap has been initiated. Adopting a flatter trajectory with greater speed, horse and human sail over broad jumps up to 27 feet (more than 8 metres) long. We run as one at speeds of 44 miles per hour (about 71 km/h), the fastest velocity any land mammal carrying a rider can achieve. In freestyle dressage events, we dance in place to the rhythm of music, trot sideways across the centre of an arena with huge leg-crossing steps, and canter in pirouettes with the horse’s front feet circling her hindquarters. Galloping again, the best horse-and-human teams can slide 65 feet (nearly 20 metres) to a halt while resting all their combined weight on the horse’s hind legs. Endurance races over extremely rugged terrain test horses and riders in journeys that traverse up to 500 miles (805 km) of high-risk adventure.

No one disputes the athleticism fuelling these triumphs, but few people comprehend the mutual cross-species interaction that is required to accomplish them. The average horse weighs 1,200 pounds (more than 540 kg), makes instantaneous movements, and can become hysterical in a heartbeat. Even the strongest human is unable to force a horse to do anything she doesn’t want to do. Nor do good riders allow the use of force in training our magnificent animals. Instead, we hold ourselves to the higher standard of motivating horses to cooperate freely with us in achieving the goals of elite sports as well as mundane chores. Under these conditions, the horse trained with kindness, expertise and encouragement is a willing, equal participant in the action.

That action is rooted in embodied perception and the brain. In mounted teams, horses, with prey brains, and humans, with predator brains, share largely invisible signals via mutual body language. These signals are received and transmitted through peripheral nerves leading to each party’s spinal cord. Upon arrival in each brain, they are interpreted, and a learned response is generated. It, too, is transmitted through the spinal cord and nerves. This collaborative neural action forms a feedback loop, allowing communication from brain to brain in real time. Such conversations allow horse and human to achieve their immediate goals in athletic performance and everyday life. In a very real sense, each species’ mind is extended beyond its own skin into the mind of another, with physical interaction becoming a kind of neural dance.

Horses in nature display certain behaviours that tempt observers to wonder whether competitive manoeuvres truly require mutual communication with human riders. For example, the feral horse occasionally hops over a stream to reach good food or scrambles up a slope of granite to escape predators. These manoeuvres might be thought the precursors to jumping or rugged trail riding. If so, we might imagine that the performance horse’s extreme athletic feats are innate, with the rider merely a passenger steering from above. If that were the case, little requirement would exist for real-time communication between horse and human brains.

In fact, though, the feral hop is nothing like the trained leap over a competition jump, usually commenced from short distances at high speed. Today’s Grand Prix jump course comprises about 15 obstacles set at sharp angles to each other, each more than 5 feet high and more than 6 feet wide (1.5 x 1.8 metres). The horse-and-human team must complete this course in 80 or 90 seconds, a time allowance that makes for acute turns, diagonal flight paths and high-speed exits. Comparing the wilderness hop with the show jump is like associating a flintstone with a nuclear bomb. Horses and riders undergo many years of daily training to achieve this level of performance, and their brains share neural impulses throughout each experience.

These examples originate in elite levels of horse sport, but the same sort of interaction occurs in pastures, arenas and on simple trails all over the world. Any horse-and-human team can develop deep bonds of mutual trust, and learn to communicate using body language, knowledge and empathy.

The critical component of the horse in nature, and her ability to learn how to interact so precisely with a human rider, is not her physical athleticism but her brain. The first precise magnetic resonance image of a horse’s brain appeared only in 2019, allowing veterinary neurologists far greater insight into the anatomy underlying equine mental function. As this new information is disseminated to horse trainers and riders for practical application, we see the beginnings of a revolution in brain-based horsemanship. Not only will this revolution drive competition to higher summits of success, and animal welfare to more humane levels of understanding, it will also motivate scientists to research the unique compatibility between prey and predator brains. Nowhere else in nature do we see such intense and intimate collaboration between two such disparate minds.

Three natural features of the equine brain are especially important when it comes to mind-melding with humans. First, the horse’s brain provides astounding touch detection. Receptor cells in the horse’s skin and muscles transduce – or convert – external pressure, temperature and body position to neural impulses that the horse’s brain can understand. They accomplish this with exquisite sensitivity: the average horse can detect less pressure against her skin than even a human fingertip can.

Second, horses in nature use body language as a primary medium of daily communication with each other. An alpha mare has only to flick an ear toward a subordinate to get him to move away from her food. A younger subordinate, untutored in the ear flick, receives stronger body language – two flattened ears and a bite that draws blood. The notion of animals in nature as kind, gentle creatures who never hurt each other is a myth.

Third, by nature, the equine brain is a learning machine. Untrammelled by the social and cognitive baggage that human brains carry, horses learn in a rapid, pure form that allows them to be taught the meanings of various human cues that shape equine behaviour in the moment. Taken together, the horse’s exceptional touch sensitivity, natural reliance on body language, and purity of learning form the tripod of support for brain-to-brain communication that is so critical in extreme performance.

One of the reasons for budding scientific fascination with neural horse-and-human communication is the horse’s status as a prey animal. Their brains and bodies evolved to survive completely different pressures than our human physiologies. For example, horse eyes are set on either side of their head for a panoramic view of the world, and their horizontal pupils allow clear sight along the horizon but fuzzy vision above and below. Their eyes rotate to maintain clarity along the horizon when their heads lie sideways to reach grass in odd locations. Equine brains are also hardwired to stream commands directly from the perception of environmental danger to the motor cortex where instant evasion is carried out. All of these features evolved to allow the horse to survive predators.

Conversely, human brains evolved in part for the purpose of predation – hunting, chasing, planning… yes, even killing – with front-facing eyes, superb depth perception, and a prefrontal cortex for strategy and reason. Like it or not, we are the horse’s evolutionary enemy, yet they behave toward us as if inclined to become a friend.

The fact that horses and humans can communicate neurally without the external mediation of language or equipment is critical to our ability to initiate the cellular dance between brains. Saddles and bridles are used for comfort and safety, but bareback and bridleless competitions prove they aren’t necessary for highly trained brain-to-brain communication. Scientific efforts to communicate with predators such as dogs and apes have often been hobbled by the use of artificial media including human speech, sign language or symbolic lexigram. By contrast, horses allow us to apply a medium of communication that is completely natural to their lives in the wild and in captivity.

The horse’s prey brain is designed to notice and evade predators. How ironic, and how riveting, then, that this prey brain is the only one today that shares neural communication with a predator brain. It offers humanity a rare view into a prey animal’s world, almost as if we were wolves riding elk or coyotes mind-melding with cottontail bunnies.

Highly trained horses and riders send and receive neural signals using subtle body language. For example, a rider can apply invisible pressure with her left inner calf muscle to move the horse laterally to the right. That pressure is felt on the horse’s side, in his skin and muscle, via proprioceptive receptor cells that detect body position and movement. Then the signal is transduced from mechanical pressure to electrochemical impulse, and conducted up peripheral nerves to the horse’s spinal cord. Finally, it reaches the somatosensory cortex, the region of the brain responsible for interpreting sensory information.

This interpretation is dependent on the horse’s knowledge that a particular body signal – for example, inward pressure from a rider’s left calf – is associated with a specific equine behaviour. Horse trainers spend years teaching their mounts these associations. In the present example, the horse has learned that this particular amount of pressure, at this speed and location, under these circumstances, means ‘move sideways to the right’. If the horse is properly trained, his motor cortex causes exactly that movement to occur.

By means of our human motion and position sensors, the rider’s brain now senses that the horse has changed his path rightward. Depending on the manoeuvre our rider plans to complete, she will then execute invisible cues to extend or collect the horse’s stride as he approaches a jump that is now centred in his vision, plant his right hind leg and spin in a tight fast circle, push hard off his hindquarters to chase a cow, or any number of other movements. These cues are combined to form that mutual neural dance, occurring in real time, and dependent on natural body language alone.

The example of a horse moving a few steps rightward off the rider’s left leg is extremely simplistic. When you imagine a horse and rider clearing a puissance wall of 7.5 feet (2.3 metres), think of the countless receptor cells transmitting bodily cues between both brains during approach, flight and exit. That is mutual brain-to-brain communication. Horse and human converse via body language to such an extreme degree that they are able to accomplish amazing acts of understanding and athleticism. Each of their minds has extended into the other’s, sending and receiving signals as if one united brain were controlling both bodies.

Analysis of brain-to-brain communication between horses and humans elicits several new ideas worthy of scientific notice. Because our minds interact so well using neural networks, horses and humans might learn to borrow neural signals from the party whose brain offers the highest function. For example, horses have a 340-degree range of view when holding their heads still, compared with a paltry 90-degree range in humans. Therefore, horses can see many objects that are invisible to their riders. Yet riders can sometimes guess that an invisible object exists by detecting subtle equine reactions.

Specifically, neural signals from the horse’s eyes carry the shape of an object to his brain. Those signals are transferred to the rider’s brain by a well-established route: equine receptor cells in the retina lead to equine detector cells in the visual cortex, which elicits an equine motor reaction that is then sensed by the rider’s human body. From there, the horse’s neural signals are transmitted up the rider’s spinal cord to the rider’s brain, and a perceptual communication loop is born. The rider’s brain can now respond neurally to something it is incapable of seeing, by borrowing the horse’s superior range of vision.

These brain-to-brain transfers are mutual, so the learning equine brain should also be able to borrow the rider’s vision, with its superior depth perception and focal acuity. This kind of neural interaction results in a horse-and-human team that can sense far more together than either party can detect alone. In effect, they share effort by assigning labour to the party whose skills are superior at a given task.

There is another type of skillset that requires a particularly nuanced cellular dance: sharing attention and focus. Equine vigilance allowed horses to survive 56 million years of evolution – they had to notice slight movements in tall grasses or risk becoming some predator’s dinner. Consequently, today it’s difficult to slip even a tiny change past a horse, especially a young or inexperienced animal who has not yet been taught to ignore certain sights, sounds and smells.

By contrast, humans are much better at concentration than vigilance. The predator brain does not need to notice and react instantly to every stimulus in the environment. In fact, it would be hampered by prey vigilance. While reading this essay, your brain sorts away the sound of traffic past your window, the touch of clothing against your skin, the sight of the masthead that says ‘Aeon’ at the top of this page. Ignoring these distractions allows you to focus on the content of this essay.

Horses and humans frequently share their respective attentional capacities during a performance. A puissance horse galloping toward an enormous wall cannot waste vigilance by noticing the faces of each person in the audience. Likewise, the rider cannot afford to miss a loose dog that runs into the arena outside her narrow range of vision and focus. Each party helps the other through their primary strengths.

Such sharing becomes automatic with practice. With innumerable neural contacts over time, the human brain learns to heed signals sent by the equine brain that say, in effect: ‘Hey, what’s that over there?’ Likewise, the equine brain learns to sense human neural signals that counter: ‘Let’s focus on this gigantic wall right here.’ Each party sends these messages by body language and receives them by body awareness through two spinal cords, then interprets them inside two brains, millisecond by millisecond.

Finally, it is conceivable that horse and rider can learn to share features of executive function – the human brain’s ability to set goals, plan steps to achieve them, assess alternatives, make decisions and evaluate outcomes. Executive function occurs in the prefrontal cortex, an area that does not exist in the equine brain. Horses are excellent at learning, remembering and communicating – but they do not assess, decide, evaluate or judge as humans do.

Shying is a prominent equine behaviour that might be mediated by human executive function in well-trained mounts. When a horse of average size shies away from an unexpected stimulus, riders are sitting on top of 1,200 pounds of muscle that suddenly leaps sideways off all four feet and lands five yards away. It’s a frightening experience, and often results in falls that lead to injury or even death. The horse’s brain causes this reaction automatically by direct connection between his sensory and motor cortices.

Though this possibility must still be studied by rigorous science, brain-to-brain communication suggests that horses might learn to borrow small glimmers of executive function through neural interaction with the human’s prefrontal cortex. Suppose that a horse shies from an umbrella that suddenly opens. By breathing steadily, relaxing her muscles, and flexing her body in rhythm with the horse’s gait, the rider calms the animal using body language. Her physical cues are transmitted by neural activation from his surface receptors to his brain. He responds with body language in which his muscles relax, his head lowers, and his frightened eyes return to their normal size. The rider feels these changes with her body, which transmits the horse’s neural signals to the rider’s brain.

From this point, it’s only a very short step – but an important one – to the transmission and reception of neural signals between the rider’s prefrontal cortex (which evaluates the unexpected umbrella) and the horse’s brain (which instigates the leap away from that umbrella). In practice, to reduce shying, horse trainers teach their young charges to slow their reactions and seek human guidance.

Brain-to-brain communication between horses and riders is an intricate neural dance. These two species, one prey and one predator, are living temporarily in each other’s brains, sharing neural information back and forth in real time without linguistic or mechanical mediation. It is a partnership like no other. Together, a horse-and-human team experiences a richer perceptual and attentional understanding of the world than either member can achieve alone. And, ironically, this extended interspecies mind operates well not because the two brains are similar to each other, but because they are so different.

Janet Jones applies brain research to training horses and riders. She has a PhD from the University of California, Los Angeles, and for 23 years taught the neuroscience of perception, language, memory, and thought. She trained horses at a large stable early in her career, and later ran a successful horse-training business of her own. Her most recent book, Horse Brain, Human Brain (2020), is currently being translated into seven languages.

Edited by Pam Weintraub
