SUMMARY Fifty years ago, the Northern Irish physicist John Bell (1928-90) arrived at a result that demonstrates the “spooky” nature of reality in the atomic and subatomic world. His theorem is now seen as the most effective weapon against espionage, something that will guarantee, in a perhaps near future, the absolute privacy of information.

*

A South American country wants to keep its strategic information private but finds itself forced to buy the equipment for that task from a far more technologically advanced country. Those devices, however, may be “bugged”.

Hence the almost obvious question: will there ever be 100% guaranteed privacy? Yes. And that holds even for a country that buys its anti-espionage technology from the “enemy”.

What makes that affirmative answer possible is a result that has been called the most profound in science: Bell’s theorem, which addresses one of the sharpest and most penetrating philosophical questions ever asked, one that underpins knowledge itself: what is reality? The theorem, which turned 50 this year, guarantees that reality, at its most intimate level, is unimaginably strange.

The history of the theorem, of its experimental confirmation and of its modern applications has several beginnings. Perhaps the most appropriate one here is a paper published in 1935 by the German-born physicist Albert Einstein (1879-1955) and two collaborators, the Russian Boris Podolsky (1896-1966) and the American Nathan Rosen (1909-95).

Known as the EPR paradox (after the initials of the authors’ surnames), the thought experiment described there summed up Einstein’s long-standing dissatisfaction with the direction taken by quantum mechanics, the theory of phenomena at the atomic scale. At first, what left a bitter taste in the mouth of the author of relativity was that this theory, developed in the 1920s, provides only the probability that a phenomenon will occur. That contrasted with the “certainty” (determinism) of so-called classical physics, which governs macroscopic phenomena.

Einstein was, in fact, put off by his own creature, since he had been one of the fathers of quantum theory. After some initial reluctance, he ended up digesting the indeterminism of quantum mechanics. One thing, however, he could never swallow: nonlocality, that is, the very strange fact that something here can instantly influence something there, even when that “there” is very far away. Einstein believed that distant things had independent realities.

Einstein went so far as to compare nonlocality (and it must be stressed that this is only an analogy) to a kind of telepathy. But his most famous description of this strangeness was “spooky action at a distance”.

ENTANGLED

The essence of the EPR argument is this: under special conditions, two particles that have interacted and then separated end up in a state called entangled, as if they were “telepathic twins”. Less pictorially, the particles are said to be connected (or correlated, as physicists prefer) and to remain so even after the interaction.

The greater strangeness comes now: if one particle of the pair is disturbed (that is, undergoes some measurement, as physicists say), the other “feels” that disturbance instantly. And this does not depend on the distance between the two particles. They may be light-years apart.

The authors of the EPR paradox argued that it was impossible to imagine nature allowing an instantaneous connection between the two objects. And, through a logical and complex argument, Einstein, Podolsky and Rosen concluded: quantum mechanics must be incomplete. And therefore provisional.

FASTER THAN LIGHT?

A hasty (though very common) reading of the EPR paradox holds that an instantaneous action (nonlocal, in the vocabulary of physics) is impossible because it would violate Einstein’s relativity: nothing can travel faster than light in a vacuum, 300,000 km/s.

However, nonlocality would act only at the microscopic level: it cannot be used, for example, to send or receive messages. In the macroscopic world, if we want to do that, we have to use signals that never travel faster than light in a vacuum. In other words, relativity is preserved.

Nonlocality has to do with persistent (and mysterious) connections between two objects: interfering with (altering, changing etc.) one of them interferes with (alters, changes etc.) the other. Instantly. The mere act of observing one of them interferes with the state of the other.

Einstein did not like the final version of the 1935 paper, which he saw only in print; the writing had been left to Podolsky. He had envisioned a less philosophical text. A few months later came the reply to EPR from the Danish physicist Niels Bohr (1885-1962). A few years earlier, Einstein and Bohr had been the protagonists of what many consider one of the most important philosophical debates in history: its subject was the “soul of nature”, in the words of one philosopher of physics.

In his reply to EPR, Bohr reaffirmed both the completeness of quantum mechanics and his antirealist view of the atomic universe: one cannot say that a quantum entity (electron, proton, photon etc.) has a property before that property is measured. That is, the property would not be real; it would not be hidden, waiting for a measuring device or any interference (even the gaze) of the observer. On this point, Einstein would later quip: “Does the Moon exist only when we look at it?”.

AUTHORITY

One way to understand what a deterministic theory is: it is one in which the property to be measured is assumed to be present (or “hidden”) in the object and can be determined with certainty. Physicists give this kind of theory a very apt name: hidden-variable theory.

In a hidden-variable theory, the property in question (known or not) exists; it is real. Hence philosophers sometimes classify this scenario as realism. Einstein liked the term “objective reality”: things exist without needing to be observed.

But in the 1930s a theorem had proved that a version of quantum mechanics as a hidden-variable theory would be impossible. The feat was the work of one of the greatest mathematicians of all time, the Hungarian John von Neumann (1903-57). And, as is not rare in the history of science, the argument from authority prevailed over the authority of the argument.

Von Neumann’s theorem was perfect from a mathematical point of view, but “wrong, foolish” and “childish” (as it came to be described) in the realm of physics, because it started from a mistaken premise. It is now known that Einstein was suspicious of that premise: “Do we have to accept this as true?”, he asked two colleagues. But he went no further.

Von Neumann’s theorem did serve, however, to practically trample the deterministic (and therefore hidden-variable) version of quantum mechanics devised in 1927 by the French nobleman Louis de Broglie (1892-1987), winner of the 1929 Nobel Prize in Physics, who ended up abandoning that line of research.

For exactly two decades, Von Neumann’s theorem and the ideas of Bohr, who gathered around him an influential school of remarkable young physicists, discouraged attempts to seek a deterministic version of quantum mechanics.

But in 1952 the American physicist David Bohm (1917-92), inspired by De Broglie’s ideas, presented a hidden-variable version of quantum mechanics, today called Bohmian quantum mechanics in honor of the researcher, who worked at the Universidade de São Paulo (USP) in the 1950s while persecuted in the US by McCarthyism.

Bohmian quantum mechanics had two essential features: 1) it was deterministic (that is, a hidden-variable theory); 2) it was nonlocal (that is, it admitted action at a distance), which made Einstein, a convinced localist, lose his initial interest in it.

PROTAGONIST

Enter the main character of this story: the Northern Irish physicist John Stewart Bell, who, on learning of Bohmian mechanics, was certain of one thing: the “impossible had been done”. What is more, Von Neumann was wrong.

Bohm’s quantum mechanics, ignored from the outset by the physics community, had just fallen on fertile ground: ever since university, Bell had been mulling over the philosophical foundations of quantum mechanics (EPR, Von Neumann, De Broglie etc.) as a “hobby”. And he had taken sides in those debates: he was an avowed Einsteinian and found Bohr obscure.

Bell was born on June 28, 1928, in Belfast, into an Anglican family of modest means. He was expected to leave school at 14, but at the insistence of his mother, who recognized the intellectual gifts of the second of her four children, he was sent to a technical secondary school, where he learned practical subjects (carpentry, construction, library science etc.).

After finishing school at 16, he tried for office jobs, but fate had it that he ended up as a technician preparing experiments in the physics department of Queen’s University, also in Belfast.

The department’s professors soon noticed the technician’s interest in physics and began to encourage him, recommending readings and lectures. With a scholarship, Bell graduated in experimental physics in 1948 and, the following year, in mathematical physics. In both cases, with honors.

From 1949 to 1960, Bell worked at the AERE (Atomic Energy Research Establishment) in Harwell, in the United Kingdom. There he met his future wife, the physicist Mary Ross, his interlocutor in several works on physics. “As I look again at these papers, I see her everywhere”, Bell said at a tribute he received in 1987, three years before he died of a cerebral hemorrhage.

He defended his doctorate in 1956, after a period at the University of Birmingham, under the supervision of the German-British physicist Rudolf Peierls (1907-95). The thesis includes a proof of a very important theorem in physics (the CPT theorem), which had been discovered shortly before by a contemporary of his.

THE THEOREM

Disagreeing with the direction of research at the AERE, the couple decided to trade stable jobs for temporary positions at the European Organization for Nuclear Research (CERN), in Geneva (Switzerland). He joined the theoretical physics division; she, the accelerator division.

Bell spent 1963 and 1964 working in the US. There he found time to devote himself to his intellectual “hobby” and to develop the result that would define his career and, decades later, bring him fame.

He asked himself the following question: is the nonlocality of Bohm’s hidden-variable theory a feature of any realist theory of quantum mechanics? In other words, if things exist without being observed, must they necessarily establish among themselves that spooky action at a distance?

Bell’s theorem, published in 1964, is also known as Bell’s inequality. Its mathematics is not complex. In a very simplified form, we can think of the theorem as an inequality: x ≤ 2 (x less than or equal to two), where “x” represents, for our purposes here, the results of an experiment.
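To see where a bound of 2 can come from, here is a minimal sketch in Python. It assumes that “x” stands for the combination of four correlations used in the later Clauser, Horne, Shimony and Holt proposal (often called CHSH), which the article does not spell out; the names `chsh`, `a0`, `a1`, `b0` and `b1` are illustrative. Each side has two possible measurement settings, and a local, realist description fixes every outcome (+1 or -1) in advance. Enumerating all such assignments shows that the combination never exceeds 2 in magnitude.

```python
from itertools import product

def chsh(a0, a1, b0, b1):
    """CHSH combination for one predetermined set of outcomes (each +1 or -1).

    a0, a1: outcomes fixed in advance for the two settings on one side.
    b0, b1: outcomes fixed in advance for the two settings on the other side.
    """
    return a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1

# Every local deterministic assignment: 2**4 = 16 combinations of +1 and -1.
values = [chsh(*outcomes) for outcomes in product([-1, 1], repeat=4)]

# Largest magnitude reachable when all outcomes exist before measurement.
max_local = max(abs(v) for v in values)
print(max_local)
```

Mixing these assignments with any probabilities (the “hidden variable”) only averages values that already lie between -2 and 2, so the average cannot escape that range either.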

The most interesting consequences of Bell’s theorem would arise if such an experiment violated the inequality, that is, showed that x > 2 (x greater than two). In that case, we would have to give up one of two assumptions: 1) realism (things exist without being observed); 2) locality (the quantum world does not allow connections faster than light).
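As a rough illustration of how quantum mechanics can exceed that bound, the sketch below evaluates the same four-correlation combination using the textbook quantum prediction for spin measurements on a pair in the singlet state, E(a, b) = -cos(a - b). The choice of the singlet state, the angles and the function name `singlet_correlation` are assumptions made for this example and do not come from the article.

```python
import math

def singlet_correlation(angle_a, angle_b):
    """Quantum prediction E(a, b) = -cos(a - b) for a spin singlet pair."""
    return -math.cos(angle_a - angle_b)

# Illustrative measurement angles in radians (an assumption of this sketch).
a0, a1 = 0.0, math.pi / 2
b0, b1 = math.pi / 4, -math.pi / 4

# Same combination as in the inequality: three correlations added, one subtracted.
x = abs(
    singlet_correlation(a0, b0)
    + singlet_correlation(a0, b1)
    + singlet_correlation(a1, b0)
    - singlet_correlation(a1, b1)
)
print(x)
```

With these angles the result should come out at 2√2, roughly 2.83, which is above the limit of 2 that any local, realist assignment can reach.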

The paper on the theorem did not attract much attention. Bell had written another one earlier, fundamental to his arriving at the result, but, owing to an error by the journal’s editor, it was published only in 1966.

REBELLION

The revival of Bell’s ideas (and, consequently, of EPR and Bohm) gained momentum from factors outside physics. Many years after the turbulent end of the 1960s, the American physicist John Clauser would recall the period: “The Vietnam War dominated the political thoughts of my generation. Being a young physicist during that revolutionary period, I naturally wanted to shake up the world”.

Science, like the rest of the world, ended up marked by the spirit of the peace-and-love generation; by the civil rights struggle; by May 1968; by Eastern philosophies; by psychedelic drugs; by telepathy. In a word: by rebellion. Translated into physics, that meant devoting oneself to an area considered heretical in academia: interpretations (or foundations) of quantum mechanics. But doing so considerably increased a young physicist’s chances of ruining his or her career: EPR, Bohm and Bell were regarded as philosophical topics, not physical ones.

The final element that gave the taboo field its momentum was the 1973 oil crisis, which reduced the supply of positions for young researchers, physicists included. To rebellion was added recession.

Clauser, with three colleagues, Abner Shimony, Richard Holt and Michael Horne, published his first ideas on the subject in 1969, under the title “Proposed Experiment to Test Local Hidden-Variable Theories”. The quartet did so partly because they had noticed that Bell’s inequality could be tested with photons, which are easier to generate. Until then, more complicated experimental setups had been envisaged.

In 1972, the proposal became an experiment, carried out by Clauser and Stuart Freedman (1944-2012), and Bell’s inequality was violated.

The world appeared to be nonlocal (ironically, Clauser was a localist!). But it only appeared so: for about a decade the experiment remained misunderstood and, therefore, disregarded by the physics community. Still, those results served to reinforce something important: the foundations of quantum mechanics were not just philosophy. They were also experimental physics.

CHANGE OF SCENE

Improvements in optical equipment (including lasers) allowed a 1982 experiment to become a classic of the field.

Shortly before, the French physicist Alain Aspect had decided to begin a late doctorate, even though he was already an experienced experimental physicist. He chose Bell’s theorem as his topic. He went to meet his Northern Irish colleague at CERN. In an interview with the physicist Ivan dos Santos Oliveira, of the Centro Brasileiro de Pesquisas Físicas, in Rio de Janeiro, and the author of this article, Aspect recounted the following exchange between him and Bell. “Do you have a permanent position?”, Bell asked. “Yes”, said Aspect. Otherwise, “you would be under great pressure not to do the experiment”, said Bell.

The exchange recounted by Aspect allows us to say that, almost two decades after the seminal 1964 paper, the subject was still shrouded in prejudice.

In an experiment performed with pairs of entangled photons, nature once again showed its nonlocal character: Bell’s inequality was violated. The data showed x > 2. Later tests stretched the distances involved: in 2007, for example, the group of the Austrian physicist Anton Zeilinger verified the violation of the inequality using photons separated by… 144 km.

In the interview in Brazil, Aspect said that, until then, the theorem had been very little known among physicists, but that it gained fame after his doctoral thesis, on whose committee, incidentally, Bell sat.

STRANGE

After all, why does nature allow Einsteinian “telepathy”? It is strange, to say the least, to think that a particle disturbed here can somehow alter the state of its companion at the far ends of the universe.

There are several ways to interpret the consequences of what Bell did. To begin with, some (badly) mistaken ones: 1) nonlocality cannot exist, because it violates relativity; 2) hidden-variable theories (Bohm, De Broglie etc.) of quantum mechanics are completely ruled out; 3) quantum mechanics really is indeterministic; 4) irrealism (that is, things exist only when observed) is the final word. The list is long.

When the theorem was published, a shallow (and erroneous) reading held that it was unimportant, since Von Neumann’s theorem had already ruled out hidden variables, and quantum mechanics would therefore indeed be indeterministic. Among those who do not accept nonlocality, there are even some who go so far as to say that Einstein, Bohm and Bell did not understand what they had done.

The American philosopher of physics Tim Maudlin, of New York University, in two excellent articles, “What Bell Did” (arxiv.org/abs/1408.1826) and “Reply to Werner” (in which he responds to comments on the previous text, arxiv.org/abs/1408.1828), offers a long list of misconceptions.

For Maudlin, renowned in his field, Bell’s theorem and its violation mean one thing only: nature is nonlocal (“spooky”) and, therefore, there is no hope for locality, as Einstein would have liked. In that sense, one can say that Bell showed Einstein was wrong. Thus, any deterministic (realist) theory that reproduces the experimental results obtained to date by quantum mechanics (incidentally, the most precise theory in the history of science) will necessarily have to be nonlocal.

From Aspect to the present, important technological developments have made possible something unthinkable a few decades ago: studying a single quantum entity (atom, electron, photon etc.) in isolation. This gave rise to the field of quantum information, which encompasses the study of quantum cryptography (the kind that will allow absolute data security) and of quantum computers, extremely fast machines. In a way, it is philosophy transformed into experimental physics.

Many of these advances are owed essentially to the rebelliousness of a generation of young physicists who wanted to defy the “system”.

An entertaining history of this period is told in “How the Hippies Saved Physics” (W. W. Norton & Company, 2011), by the American historian of physics David Kaiser. A detailed historical analysis can be found in “Quantum Dissidents: Research on the Foundations of Quantum Theory circa 1970” (bit.ly/1xyipTJ, subscribers only), by the historian of physics Olival Freire Jr., of the Universidade Federal da Bahia.

For those more interested in the philosophical angle, there are the two award-winning volumes of “Conceitos de Física Quântica” (Editora Livraria da Física, 2003), by the physicist and philosopher Osvaldo Pessoa Jr., of USP.

PRIVACY

By now, the reader may be wondering what Bell’s theorem has to do with 100% guaranteed privacy.

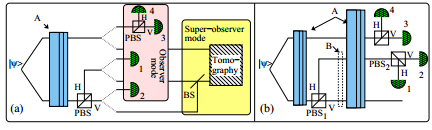

In the future, it is (quite) likely that information will be sent and received in the form of entangled photons. Recent research in quantum cryptography indicates that it would suffice to subject these particles of light to the Bell inequality test. If the inequality is violated, then there is no possibility that the message has been improperly snooped on. And the test does not depend on the equipment used to send or receive the photons. The theoretical basis for this can be found, for example, in “The Ultimate Physical Limits of Privacy”, by Artur Ekert and Renato Renner (bit.ly/1gFjynG, subscribers only).
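The logic of such a check can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not the protocol analyzed by Ekert and Renner: it assumes each trial is logged as a tuple of two setting choices (0 or 1) and two outcomes (+1 or -1), and the function names `estimate_x` and `passes_bell_test` are hypothetical. It estimates the four correlations from the log, combines them into “x”, and accepts the link only if the bound of 2 is exceeded.

```python
from collections import defaultdict

def estimate_x(records):
    """Estimate x from trial records (s1, s2, o1, o2).

    s1, s2: setting chosen on each side (0 or 1).
    o1, o2: outcome observed on each side (+1 or -1).
    Assumes every pair of settings appears at least once in the records.
    """
    sums = defaultdict(int)
    counts = defaultdict(int)
    for s1, s2, o1, o2 in records:
        sums[(s1, s2)] += o1 * o2
        counts[(s1, s2)] += 1
    # Average product of outcomes for each pair of settings.
    e = {pair: sums[pair] / counts[pair] for pair in counts}
    return e[(0, 0)] + e[(0, 1)] + e[(1, 0)] - e[(1, 1)]

def passes_bell_test(records, bound=2.0):
    """Accept only if the estimated x exceeds the local bound in magnitude."""
    return abs(estimate_x(records)) > bound

# Made-up records in which both sides always output +1: no violation.
classical_records = [(s1, s2, 1, 1) for s1 in (0, 1) for s2 in (0, 1)]
print(estimate_x(classical_records), passes_bell_test(classical_records))
```

A real test would also need many trials and a statistical margin above 2 before trusting the result; this sketch omits both to keep the idea visible.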

In a perhaps not too distant future, Bell’s theorem may become the most powerful weapon against espionage. That is tremendous comfort for a world that seems headed toward zero privacy. It is also an immense outgrowth of a philosophical question that, according to the American physicist Henry Stapp, a specialist in the foundations of quantum mechanics, became “the most profound result in science”. Deservedly so. After all, why did nature opt for “spooky action at a distance”?

The answer is a mystery. A pity that the question is not even mentioned in undergraduate physics courses in Brazil.

CÁSSIO LEITE VIEIRA, 54, a journalist at the Instituto Ciência Hoje (RJ), is the author of “Einstein – O Reformulador do Universo” (Odysseus).

JOSÉ PATRÍCIO, 54, a visual artist from Pernambuco, takes part in the exhibition “Asas a Raízes” at Caixa Cultural in Rio, from 17/1 to 15/3.